For years I've been told that it is not possible to mix in DSD, and that this is one of the major limitations of recording in DSD. Well, I'm now learning from Tom Caulfield, mastering engineer for NativeDSD, and Gonzalo Noqué, owner and recording engineer at Eudora Records and Noqué Studio, that this is no longer true. Proof of the point is this marvelous recent release from Eudora, Lost in Venice, about which I enthused in a recent review (HERE).

Lost in Venice, Infermi d'Amore, Vadym Makarenko (including four world premiere recordings). Eudora 2022 (Pure DSD256) (HERE)

Eudora has now released two further albums in Pure DSD256 that are very striking in the transparency of their sound:

J.S. Bach Complete Sonatas for Violin and Harpsichord, Andoni Mercero and Alfonso Sebastian. Eudora Records 2022 (Pure DSD256) (HERE)

String Quartets of Haydn, Almeida, and Beethoven, Protean Quartet. Eudora Records 2022 (Pure DSD256) (HERE)

In an email exchange with Gonzalo, he told me that one of the challenges in recording the Lost in Venice album was the venue. It was simply not acoustically ideal and he had to work a lot with his microphones to get the sound he hoped to achieve. He did—in spades! As I wrote in my review, "I think this is perhaps the finest recording I've yet heard from his hands..."

Gonzalo recollects that he used 12 microphones to capture the stereo release: main pair, room pair and spot microphones. Tom Caulfield at NativeDSD then mixed the 12 microphone channels into the final stereo release via Signalyst HQPlayer Pro per the mixing instructions Gonzalo provided.

But this is a PURE DSD256 release! How did Gonzalo and Tom manage to mix the various microphone channels and retain everything in DSD?

I contacted Gonzalo to see if he could share more about the process to create the mixing instructions that then allowed Tom to do the final mastering while staying entirely in DSD256. It is a fascinating approach that Gonzalo believes any recording/mastering engineer could apply to recordings with a manageable number of microphones.

Gonzalo Noqué setting up for a recording session from several years ago.

Rushton Paul: I understand that the final release of this album at NativeDSD was created entirely in DSD, is this correct?

Gonzalo Noqué: That is correct. The Lost in Venice recording sold on NativeDSD is Pure DSD256 mixed entirely in the DSD domain from a total of 12 microphone tracking channels. Tom and I accomplished this as a collaboration. I created the mixing instructions, and Tom used Signalyst HQPlayer Pro and the mixing instructions I provided so that the album stayed completely in DSD256.

Can you explain further? As I understood it, it is not possible to mix channels in DSD?

Well, the original Sony Sonoma workstation did allow people to mix tracking channels in DSD64, but not at higher modulation rates. So, as DSD modulation moved to DSD256, releasing an album in DSD meant either mixing in analog before the analog-to-digital converter, or using Merging Technologies' Pyramix Digital Audio Workstation to mix in DXD and then output those post-processed DXD files to DSD using Pyramix's album publishing function.

Over the past several years, Tom and NativeDSD have worked with Jussi Laako at Signalyst to use Jussi's HQPlayer Pro (HQP) software to mix DSD tracking channels. The mixing is done in HQP through a process called "modulation." This process keeps the signal entirely in the DSD domain. The only problem is that HQP is not a digital audio workstation.

If HQP does not have a digital audio workstation function, how did you determine the mixing instructions?

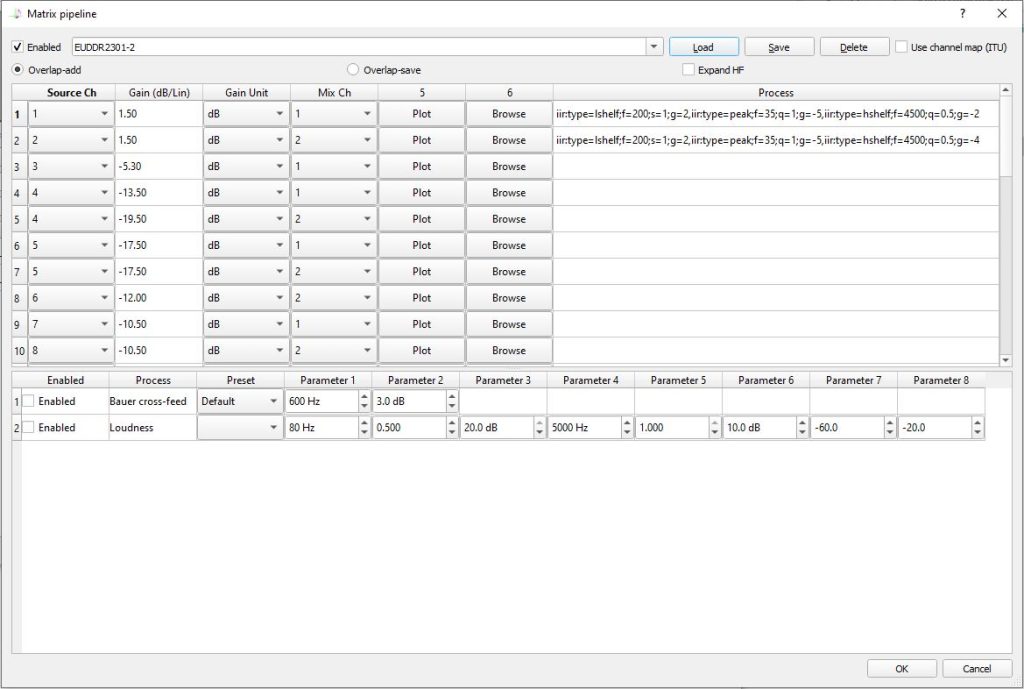

A DAW works with visual knobs, faders and settings that make the process of mixing "user friendly" and mimic what an analog mixer looks like. HQP, on the other hand, features matrix processing (fig. 1), essentially a command-line "channel in, channel out" pipeline. Each line tells the software what to do with an original channel and where to send it in the channel mapping of the resulting mixed file.

Fig. 1
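To make the "channel in, channel out" idea concrete, here is a minimal sketch of a routing table: each route takes one input (tracking) channel, applies a gain in dB, and sums it into one output channel. The names `Route`, `mix` and `db_to_linear` are hypothetical; this is not HQP's matrix syntax, and it works on plain floating-point samples to show the arithmetic, whereas HQP performs its mixing within its DSD modulation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    """One hypothetical "channel in -> channel out" line of a routing matrix."""
    source: int      # index of the input (tracking) channel
    dest: int        # index of the output (mix) channel
    gain_db: float   # gain applied on this route, in dB


def db_to_linear(gain_db: float) -> float:
    """Convert a gain in dB to a linear amplitude factor."""
    return 10.0 ** (gain_db / 20.0)


def mix(inputs: list[list[float]], routes: list[Route], n_outputs: int) -> list[list[float]]:
    """Sum every routed input channel into its output channel, sample by sample."""
    n_samples = len(inputs[0])
    outputs = [[0.0] * n_samples for _ in range(n_outputs)]
    for route in routes:
        gain = db_to_linear(route.gain_db)
        src = inputs[route.source]
        dst = outputs[route.dest]
        for i in range(n_samples):
            dst[i] += gain * src[i]
    return outputs


# Main pair on channels 0/1 at unity gain, a spot mic on channel 2
# sent to both outputs at -3 dB so it images in the center.
routes = [Route(0, 0, 0.0), Route(1, 1, 0.0), Route(2, 0, -3.0), Route(2, 1, -3.0)]
```

Note that the centered spot microphone needs two routes, one per output channel, which is exactly the pattern described for fig. 3.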

Let's look at a typical example: a spot microphone channel being added to a stereo mix. In Pyramix, if you want that channel to be "placed" in the center of the image, you just set the pan knob to the center (fig. 2). Internally, Pyramix then sends that source channel's audio to both the left and right output channels with a -3dB attenuation to avoid clipping (sending them without attenuation would cause clipping, as the sum would exceed 0dB). As readers will know, the brain perceives the same sound sent to both the left and right channels as located in the center.

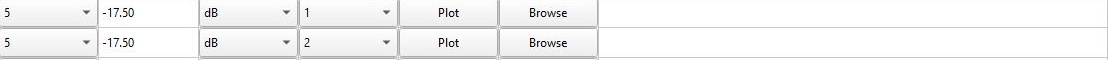

Fig. 2 (shows a -17.5dB gain as shown below in Fig. 3)

If you want to achieve that within HQP, you have to send that source channel twice (fig. 3): once to the left output channel with a -3dB gain, and once to the right output channel with the same -3dB gain.

Fig. 3 (shows a -17.5dB gain, as used for these particular tracking channels being mixed in)

Now, if you're placing a channel anywhere between the center and hard left or right, things get tricky. Merging no longer fully knows the exact "pan law" Pyramix applies at positions other than center or hard left/right, so I've made my own listening tests and come up with my own Pyramix-to-HQP "pan conversion law," so to speak.
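For readers who want to see what a pan law looks like as numbers, here is a sketch of the textbook constant-power (sine/cosine) pan law, which converts a pan position into a pair of left/right route gains. This is an assumption chosen for illustration: it is neither Pyramix's internal law nor Gonzalo's own listening-derived conversion law. It does, however, reproduce the roughly -3dB per channel at center described above.

```python
import math


def constant_power_pan_db(pan: float) -> tuple[float, float]:
    """Return (left_db, right_db) for pan in [-1 (hard left), +1 (hard right)].

    Textbook constant-power law: left = cos(theta), right = sin(theta),
    so left**2 + right**2 == 1 at every position.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be in [-1, 1]")
    theta = (pan + 1.0) * math.pi / 4.0
    left, right = math.cos(theta), math.sin(theta)

    def to_db(gain: float) -> float:
        # Treat a (near-)zero gain as "route muted".
        return 20.0 * math.log10(gain) if gain > 1e-12 else -math.inf

    return to_db(left), to_db(right)


# Center: about -3.01 dB on each side; hard left: 0 dB left, right muted.
center_left_db, center_right_db = constant_power_pan_db(0.0)
```

In an HQP-style matrix, each of the two returned values would become the gain of one route from the source channel to the corresponding output channel.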

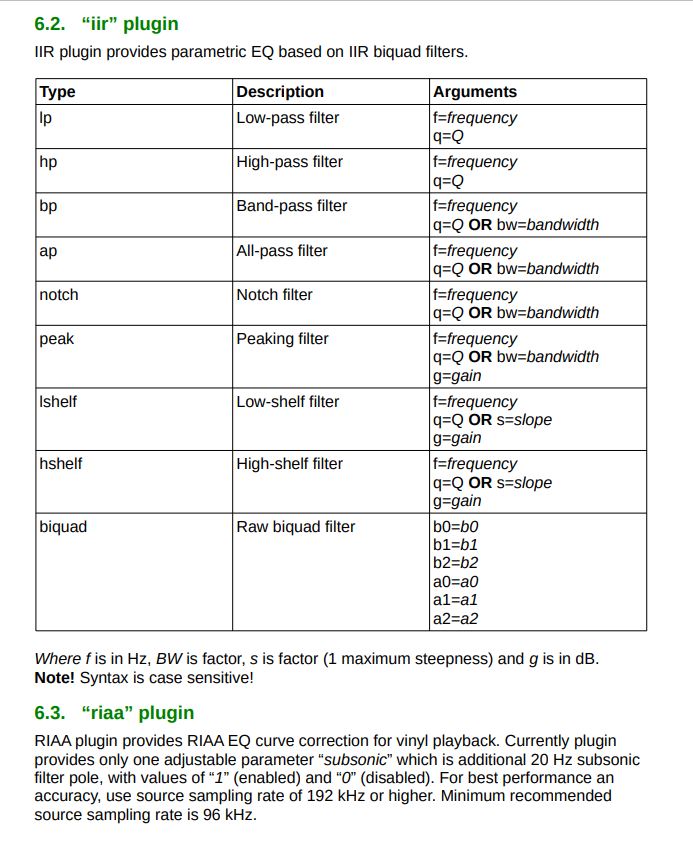

Lastly, HQP allows for equalization (EQ), with specific command line instructions (fig. 4). In this case, going from Pyramix to HQP is easier, as HQP features a graphic plot that represents what you've already set in the written instructions (fig. 5).

Fig. 4

Fig. 5
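HQP's EQ is written as IIR filter expressions rather than set with knobs. As an illustration of the kind of filter such an expression describes, here is a sketch of a single peaking-EQ biquad using the widely published RBJ "Audio EQ Cookbook" formulas, plus a helper that evaluates its magnitude response (the same information a plot like fig. 5 shows). The cookbook design, the function names and the example sample rate are assumptions for illustration; HQP's own expression syntax is not reproduced here.

```python
import cmath
import math


def peaking_eq(fs: float, f0: float, gain_db: float, q: float):
    """Return normalized (b, a) coefficients of an RBJ cookbook peaking EQ."""
    amp = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    b = [1.0 + alpha * amp, -2.0 * cos_w0, 1.0 - alpha * amp]
    a = [1.0 + alpha / amp, -2.0 * cos_w0, 1.0 - alpha / amp]
    a0 = a[0]
    return [x / a0 for x in b], [x / a0 for x in a]


def magnitude_db(b, a, fs: float, f: float) -> float:
    """Evaluate |H(e^jw)| in dB at frequency f for a 2nd-order section."""
    z_inv = cmath.exp(-1j * 2.0 * math.pi * f / fs)
    num = b[0] + b[1] * z_inv + b[2] * z_inv ** 2
    den = a[0] + a[1] * z_inv + a[2] * z_inv ** 2
    return 20.0 * math.log10(abs(num / den))


# Example: +2 dB bell at 1 kHz, Q = 1, at an arbitrary 352.8 kHz rate.
b, a = peaking_eq(352_800.0, 1_000.0, 2.0, 1.0)
```

By construction this filter has exactly the requested gain at its center frequency and unity gain at DC, which is why a plot of the written coefficients is a convenient check against what was set in Pyramix.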

Why do you go to this additional work rather than simply using the DSD output from the DXD post processed file that you can get from Pyramix?

Going this route is definitely not very practical, and it is still pretty limiting compared to the DXD path within Pyramix. But to my ears, it delivers what I believe is the best digital sound achievable today for Classical music recorded in real acoustics. So, in my mind, I can only think: "Why wouldn't I do it?" Whether the difference is large or small, noticeable by many or by few, doesn't really matter.

Do you have any suggestion for recordists who would like to sample the difference in sound quality?

Well, in order to fully appreciate the difference, I think the best option is to record in DSD256, mix both in DXD within Pyramix and in HQP, and then compare the resulting files. I think that any recording engineer who's spent time listening to the subtle differences between microphones, choosing between them and refining the equipment to find the best sound, trying different recording techniques and developing those listening skills, will find that this route I chose delivers a finesse and a textural and harmonic palette that is worth the effort.

Thank you for sharing so much about what you are doing with your recordings. I appreciate all the time you've given me. (End of conversation.)

Free Download of DSD Mixing Sample Files

There is no better way to understand the difference in sound quality to which Gonzalo refers than to hear it for yourself. To this end, Gonzalo agreed to make available to Positive Feedback readers three pairs of sample files from three different Eudora albums, including a string quartet, a violin concerto, and a violin and harpsichord duo. In each pair, both files come from the same DSD256 microphone tracking channels, mixed either a) via a DXD project in Pyramix or b) via a Pure DSD modulation in HQPlayer Pro. These sample files are free to download.

Noqué Studio DXD Sample Mixing Files - download by clicking on this link

I asked Gonzalo to describe what he hears in these samples and he said:

"All three samples are period instruments. It's difficult to put into words the differences I feel/hear. But, in my view there's a feeling of easiness and finesse with the DSD256 mixed with HQPlayer. The DXD processed files feel a bit closer and tense in comparison, while the HQPlayer mixed files have a more refined and organic sound, with the relationship with the acoustics of the recording venue better felt. I hope the article does not give the idea that I am a kind of DSD evangelist trying to convince people, as this is something very far from my nature. Acoustics, choice of microphone and placement of them is what makes a recording really good. The DSD processed in the Pure DSD domain is the icing on the cake. Please, have a listen to the samples and let me know what you feel/hear."

I also asked my listening partner, Ann, to lend an ear to this evaluation. We played the files together and reached exactly the same conclusion across all six files. As Ann immediately said: the Pure DSD files are cleaner, they have more detail, they're more open, and they have a greater sense of air.

Yep, I agree. And what she describes is consistent across all the samples. Is it a dramatic difference? No. But it is a clearly audible difference. It is also a consistent difference across all the many Pure DSD recordings to which I've listened.

Am I happy listening to DXD? Oh, certainly. But, the Pure DSD is simply that bit better sonically. Remember, I'm the past vinylholic who went out of my way for over forty years to seek out the better LP pressing, the better mastering, the 45rpm release because it sounded closer to the master tape.

Digital is the same: there is good, and there is better. And the better your digital playback system is, the more easily you hear the difference, just as in my days of pursuing vinyl playback.

So, I hope you will find the time to listen to the file samples Gonzalo has provided. Also, I encourage you to spend some time listening to the Pure DSD recordings being released by Eudora, Cobra, Hunnia, Just Listen, and other labels. You may find it difficult to go back.

And, for those of you reading this who may, perhaps, make your own recordings, I hope you will experiment with keeping your recordings in the Pure DSD domain to see what you think of the sound quality you get. Tom Caulfield suggested a simple experiment: take your primary stereo microphone channel tracks from a DSD256 recording and play them without any other mixing or processing. Now take those two stereo channel tracks and convert them to DXD, as you would for typical post processing. Don't do any post processing, simply publish/convert them back to DSD256. Now listen to the DXD processed DSD256 file and compare.

If you need to mix microphone tracking channels and you can afford to do so, by all means try out mixing in HQPlayer Pro via DSD modulation. Just keep in mind what Tom Caulfield advised when talking with me: keep to a single pass with all changes built into that one pass through the software. DSD modulation is just like analog tape: multiple generations eventually accumulate audible artifacts. Always go back to your source files and feed them in with all the changes you need to make. Never add a modulation on top of a modulation.
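One way to picture "all changes built into one pass" is with plain linear algebra: any sequence of purely linear gain and routing stages can be folded into a single matrix and applied once to the source channels. The sketch below shows that folding with hypothetical helper names on ordinary floating-point frames. It is an analogy only: it does not model HQP's DSD modulation, and Tom's advice concerns avoiding repeated remodulation, not matrix arithmetic.

```python
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Compose two linear stages: applying b first, then a, equals applying a @ b."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def apply(m: Matrix, frame: list[float]) -> list[float]:
    """Apply a gain/routing matrix to one frame (one sample per input channel)."""
    return [sum(m[i][k] * frame[k] for k in range(len(frame))) for i in range(len(m))]


# Stage 1: 3 tracking channels -> stereo (spot mic at about -3 dB to each side).
stage1 = [[1.0, 0.0, 0.708], [0.0, 1.0, 0.708]]
# Stage 2: a later left/right trim. Instead of a second pass, fold it into stage 1.
stage2 = [[0.9, 0.0], [0.0, 0.8]]
single_pass = matmul(stage2, stage1)  # 2 x 3 matrix applied once to the sources
```

Going back to the source channels with one combined set of instructions is the matrix equivalent of never stacking one modulation on top of another.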

Taking a deeper dive into the weeds... What is happening when digitizing musical files that may underlie the sonic differences that Gonzalo and others are hearing in Pure DSD files?

I was curious about the technical differences between converting the file to DXD for post-processing and keeping the file in Pure DSD. So I reached out to Tom Caulfield, mastering engineer for NativeDSD, to see if he could help me understand. Tom graciously agreed to talk me through what DSD really is, how it differs from DXD, and what happens as one moves a file from one to the other. (For an earlier article in which Tom discussed DSD with me in much greater technical detail, see HERE.)

Tom Caulfield, mastering engineer for NativeDSD

What follows is a paraphrasing of what I think I've learned. Any errors or confusion are mine and no one else's. Let's dive in...

Format Conversions are Not Lossless.

First, the bottom-line reason for taking the extra effort to record and post-process in DSD is that DSD is the closest digital format to the original analog microphone signal (further explanation below). By staying in DSD, one keeps that original signal as pure as possible. Every conversion to another digital format after this initial conversion of the analog signal to DSD introduces some unintended changes to the audio. It is mathematically impossible to make later conversions without introducing some artifacts, and equally impossible to convert back without introducing additional artifacts. (It is not a "lossless" process.)

The original analog microphone signal is a continuously changing voltage level that is proportional to the sound pressure level (SPL) presented to the microphone. This voltage signal is converted into a digital format known as a 1-bit wide variant of Pulse Density Modulation (PDM), commonly known by the Sony/Philips marketing name "DSD."

By converting this initial PDM bitstream into DXD for post-processing, we unavoidably create more opportunities for artifacts to be introduced. The question for the producer, recording engineer, or mastering engineer is whether the added benefits of editing in DXD are needed (or worthwhile) to achieve the desired finished product. In many cases, the conversion to DXD is unavoidable for certain post-production editing functions (e.g., adding reverb), but, as Tom and Gonzalo are demonstrating, it is no longer needed for mixing.

So why is the first digital conversion a PDM bitstream?

The reason is that all A/D converters available today, and since the early 2000s, are front-ended with Sigma-Delta modulators performing the actual analog signal to digital bitstream conversion. And Sigma-Delta modulators convert to PDM.

So what are these Sigma-Delta modulators doing?

In simplified terms, the analog signal from the microphone is a continuously changing voltage level that is proportional to the sound pressure level (SPL) presented to the microphone. The Sigma-Delta modulator creates a continuous stream of 1 and 0 bits in which the ratio of 1s to 0s is proportional to this voltage level. The resulting bitstream is Pulse Density Modulation. (Again, for a more detailed explanation of this, see the earlier article with Tom Caulfield, HERE.)
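The density principle can be shown with the simplest possible loop, a first-order Sigma-Delta modulator: an integrator accumulates the difference between the input and the previous output bit, and the sign of the integrator sets the next bit. This is a deliberately simplified sketch; real DSD modulators are higher-order designs with noise shaping, and the mapping of bit 1 to +1 and bit 0 to -1 is an assumption of this example.

```python
def sigma_delta_first_order(samples: list[float]) -> list[int]:
    """Convert levels in [-1, 1] to a 1-bit PDM stream (1 -> +1, 0 -> -1)."""
    integrator = 0.0
    feedback = 0.0
    bits = []
    for x in samples:
        integrator += x - feedback          # accumulate the error ("sigma" of "delta")
        bit = 1 if integrator >= 0.0 else 0 # 1-bit quantizer
        feedback = 1.0 if bit else -1.0     # fed back as a full-scale level
        bits.append(bit)
    return bits


# A steady level of +0.5 produces about 75% ones; 0.0 produces 50%; -0.5 about 25%.
bits = sigma_delta_first_order([0.5] * 10_000)
density = sum(bits) / len(bits)
```

Because the integrator stays bounded, the average of the output bits tracks the input level ever more closely as more bits are counted, which is what "pulse density" means.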

To obtain a DXD file (352.8kHz Pulse Code Modulation, or PCM), the PDM bitstream is low-pass filtered to remove the high-frequency alternating 1/0 bit carrier and decimated (reduced to a lower sample rate), producing digital samples that are signal level values expressed as binary numbers.
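As a rough sketch of that step: DSD256 runs at 256 × 44.1kHz (11.2896MHz) and DXD at 8 × 44.1kHz (352.8kHz), so each DXD sample stands in for 32 DSD256 bits. The example below uses the crudest possible decimation filter, a plain average of each block of 32 bits. That boxcar filter is an assumption for illustration only; real converters use much steeper multi-stage digital filters.

```python
DSD256_RATE = 256 * 44_100              # 11,289,600 bits per second per channel
DXD_RATE = 8 * 44_100                   # 352,800 samples per second per channel
DECIMATION = DSD256_RATE // DXD_RATE    # 32 bits per DXD sample


def pdm_to_pcm(bits: list[int], factor: int = DECIMATION) -> list[float]:
    """Crude PDM -> PCM: map bits to +/-1 and average each block of `factor` bits."""
    levels = [1.0 if b else -1.0 for b in bits]
    return [
        sum(levels[i:i + factor]) / factor
        for i in range(0, len(levels) - factor + 1, factor)
    ]


# 24 ones and 8 zeros in a 32-bit block average to a PCM value of 0.5.
sample = pdm_to_pcm([1, 1, 0, 1] * 8)
```

The sketch also hints at why the conversion is one-way: the bitstreams `[1, 0] * 16` and `[1] * 16 + [0] * 16` are different, yet both decimate to the same PCM value of 0.0, so the original bits cannot be recovered from the sample.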

This PDM-to-PCM conversion is necessary for traditional binary computer math processing, because a PDM bitstream contains no digital value information. Unfortunately, the PDM-to-PCM conversion is lossy, meaning that the original PDM bitstream cannot be recreated from the resulting PCM samples.

Translated: this means there's a loss of very fine sound resolution detail in the PDM to PCM conversion.

While a DSD bitstream is the interim first step in the analog-to-digital encoding process on the path to producing PCM samples, it can also be stored and played back on a digital computer. And it can be channel mixed and EQ'd in post-processing through an additional modulation step using Signalyst HQPlayer Pro.

So, a summation of all this:

1) PDM is the first stage of A/D conversion and is virtually an analog signal itself;

2) the next stage, converting the PDM to PCM, is lossy and cannot be recovered completely;

3) the losses in the PDM-to-PCM conversion are audible to some listeners; and

4) it is now possible to mix microphone tracking channels and make volume adjustments while staying entirely in PDM, so mixing is no longer a technical limitation of working in DSD, even though some further signal manipulation, like adding reverb, is still not possible.

A reminder of why we care about Pure DSD256 recordings—it simply sounds better

When I collected vinyl, I always searched for the more transparent and revealing pressings. Often I was seeking out 45 rpm reissues where I could find them. Why? They sounded better. They sounded as close to the sound of the master tape as I felt I could ever get playing vinyl. Yes, these 45 rpm releases were more trouble to play given their short listening sides. Yes, they were more expensive. But, wow, they were dramatically worthwhile for our listening enjoyment.

So, why do I care about Pure DSD256? Same reason: it just sounds better.

Pure DSD256 is a purist pursuit. It continues a path we've been following for decades: always seeking the better-sounding, more transparent recording of acoustic music performed in a real acoustic venue, the one most true to what the microphones picked up. For us, today, that better, more transparent, more true-to-the-source recording is Pure DSD256. And, for us, it is worth the pursuit.

We are gratified that more and more recording labels (like Eudora, Cobra, Hunnia, Just Listen, Base2, Yarlung, Channel Classics) are willing to make the commitment to this pursuit where recording realities allow. Being able now to mix and EQ in Pure DSD makes this possible for more recording projects.

Update: Frequency EQ in DSD using HQPlayer Pro.

Gonzalo mentions doing EQ in HQP as part of his mixing process. See Fig. 4 and Fig. 5 above. Given some questions that were raised, I went back to confirm with Tom Caulfield that it is possible to frequency EQ with HQP while staying completely in the DSD domain. Tom replied:

Definitely yes. Attached is the manual page screenshot for IIR filters. It's not a graphical user interface like Pyramix, but an expression as shown at the top of the page. You see an example of this in your DSD article... These expressions are chained together for multiple-pole filters, so if one can be designed, one can implement it in HQPlayer Pro.

Here's the manual page: